Create the LUNs for the cluster

Before we deploy the cluster, we need to create the shared disks it will use. The layout you choose depends on your storage vendor’s recommendations and on whether you are building an extended distance cluster. I am setting this up on Dell Compellent storage, so I followed the Dell documents "Dell Storage Center and Oracle12c Best Practices" and "Dell Compellent – Oracle Extended Distance Clusters".

About the storage I used

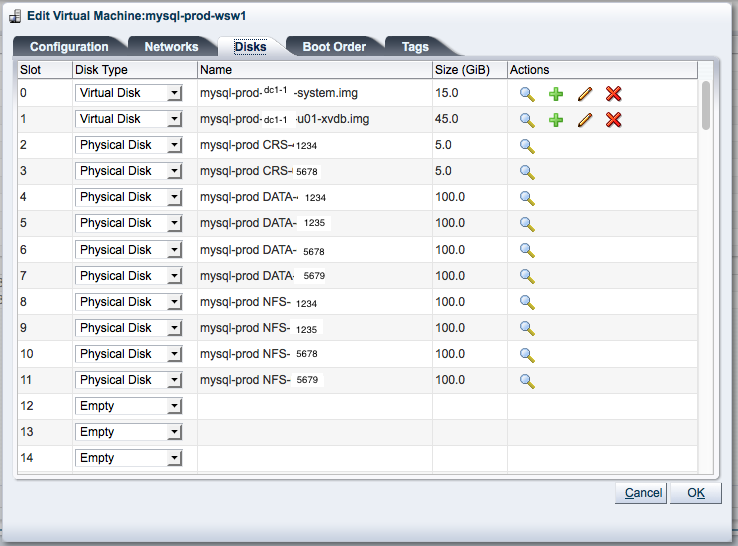

Compellent systems are active/passive, so for best performance and easy manageability we want one LUN from each controller in each diskgroup. Each Compellent controller has a unique serial number, so I decided to include it in the disk name to make identification easier. For example, let’s say controllers 1234 and 1235 are a pair in one system, and 5678 and 5679 are a pair in another. Another notable point about this storage is that Dell recommends not partitioning the ASM disks. That way you can easily grow them in the future instead of adding more LUNs for growth.
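As a small illustration of the naming scheme, the sketch below builds one LUN name per controller for a diskgroup and recovers the owning controller from a name. The helper names and the exact `<cluster> <DISKGROUP>-<serial>` format are assumptions modeled on the example names in this post, not something the Compellent tools generate for you.

```python
# Hypothetical helpers modeling this post's naming scheme:
# "<cluster> <DISKGROUP>-<controller serial>". The serials are the
# made-up example values, not read from a real Storage Center.

# Controller pairs in each Compellent system (example serials).
SYSTEM_A = [1234, 1235]
SYSTEM_B = [5678, 5679]


def lun_names(cluster: str, diskgroup: str, controllers: list[int]) -> list[str]:
    """Return one LUN name per controller, e.g. 'mysql-prod DATA-1234'."""
    return [f"{cluster} {diskgroup}-{serial}" for serial in controllers]


def owning_controller(lun_name: str) -> int:
    """Recover the controller serial from a name built by lun_names()."""
    return int(lun_name.rsplit("-", 1)[1])


if __name__ == "__main__":
    for name in lun_names("mysql-prod", "DATA", SYSTEM_A + SYSTEM_B):
        print(name, "->", owning_controller(name))
```

Keeping the serial at the end of the name means a single string split is enough to tell which controller (and therefore which system) a disk lives on.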

LUNs for Extended Distance Cluster

- CRS Diskgroup (used for voting files and cluster registry)
  - mysql-prod CRS-1234 – 5 GB Tier 1 Storage
  - mysql-prod CRS-5678 – 5 GB Tier 1 Storage
- DATA Diskgroup (used to store MySQL databases)
  - mysql-prod DATA-1234 – 100 GB Tier 1 Storage
  - mysql-prod DATA-1235 – 100 GB Tier 1 Storage
  - mysql-prod DATA-5678 – 100 GB Tier 1 Storage
  - mysql-prod DATA-5679 – 100 GB Tier 1 Storage
- NFS Diskgroup (used to store HA NFS shares)
  - mysql-prod NFS-1234 – 100 GB All Tiers
  - mysql-prod NFS-1235 – 100 GB All Tiers
  - mysql-prod NFS-5678 – 100 GB All Tiers
  - mysql-prod NFS-5679 – 100 GB All Tiers

We will let ASM mirror the disks between the two sites (normal redundancy, i.e. two-way mirroring). We also need to make sure that the volumes from controllers 1234 and 1235 are in one failure group (failgroup) and the volumes from 5678 and 5679 are in another. In effect, ASM stripes across the volumes within a failgroup for performance and mirrors between the failgroups for redundancy.
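To make the stripe-within, mirror-between layout concrete, here is a rough model of how the DATA LUNs fall into the two failgroups and what that means for usable space. It is a simplified sketch assuming normal redundancy with exactly two failgroups; the failgroup names `SITE1` and `SITE2` and the helper functions are hypothetical, and real ASM usable space is further reduced by metadata and any free space reserved for rebalancing.

```python
# Simplified model, assuming normal redundancy (two-way mirroring) with
# exactly two failure groups, one per site. Ignores ASM metadata and
# free space reserved for rebalancing.

# Hypothetical failgroup names mapped to the controller serials at each site.
FAILGROUPS = {
    "SITE1": {1234, 1235},
    "SITE2": {5678, 5679},
}

# DATA diskgroup LUNs from the list above: name -> size in GB.
DATA_LUNS = {
    "mysql-prod DATA-1234": 100,
    "mysql-prod DATA-1235": 100,
    "mysql-prod DATA-5678": 100,
    "mysql-prod DATA-5679": 100,
}


def failgroup_for(lun_name: str) -> str:
    """Pick the failgroup from the controller serial at the end of the name."""
    serial = int(lun_name.rsplit("-", 1)[1])
    for failgroup, serials in FAILGROUPS.items():
        if serial in serials:
            return failgroup
    raise ValueError(f"no failgroup owns controller {serial}")


def group_by_failgroup(luns: dict[str, int]) -> dict[str, list[str]]:
    """LUNs that land in the same failgroup are striped together."""
    groups: dict[str, list[str]] = {}
    for name in sorted(luns):
        groups.setdefault(failgroup_for(name), []).append(name)
    return groups


def mirrored_usable_gb(luns: dict[str, int]) -> int:
    """With two failgroups, each one holds a full copy of every extent,
    so usable space is capped by the smaller failgroup."""
    groups = group_by_failgroup(luns)
    return min(sum(luns[name] for name in names) for names in groups.values())


if __name__ == "__main__":
    print(group_by_failgroup(DATA_LUNS))
    print(mirrored_usable_gb(DATA_LUNS), "GB usable of", sum(DATA_LUNS.values()), "GB raw")
```

For the four 100 GB DATA LUNs this gives 200 GB usable out of 400 GB raw: each site holds a complete copy, which is the price of surviving the loss of a whole datacenter.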

We need a separate CRS diskgroup because of Doc ID 1992968.1 / Bug 20473959. In an extended distance cluster the voting files also need an NFS quorum disk (a third voting location, typically at a third site). If we kept the voting files in the DATA diskgroup, adding that NFS quorum disk would prevent us from using ACFS on the diskgroup, and without ACFS there would be no filesystem to store our MySQL databases on.
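Why a quorum disk is needed at all may not be obvious. Oracle Clusterware keeps one voting file in an external redundancy diskgroup, three in a normal redundancy diskgroup and five in a high redundancy diskgroup, and each voting file goes in a different failure group. A two-site CRS diskgroup only has two failgroups, so it needs a third one, which is the NFS quorum failgroup. The sketch below just encodes those counts; the failgroup names and the helper are hypothetical.

```python
# Voting files Oracle Clusterware keeps in an ASM diskgroup, by redundancy.
# Each voting file needs its own failure group.
VOTING_FILES = {"EXTERNAL": 1, "NORMAL": 3, "HIGH": 5}


def missing_failgroups(redundancy: str, failgroups: list[str]) -> int:
    """How many more failure groups are needed before the diskgroup can
    hold the voting files for this redundancy level."""
    needed = VOTING_FILES[redundancy]
    return max(0, needed - len(set(failgroups)))


if __name__ == "__main__":
    # Hypothetical failgroup names, one per datacenter.
    crs = ["SITE1", "SITE2"]
    print(missing_failgroups("NORMAL", crs))                   # 1
    print(missing_failgroups("NORMAL", crs + ["QUORUM_NFS"]))  # 0
```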

The LUNs above must be presented to BOTH Oracle VM clusters. You will also need one LUN in each datacenter, presented only to the local site, to host the Oracle VM repository.

The NFS diskgroup isn’t strictly necessary. It lets the cluster also host HA NFS file shares; if you don’t need those, feel free to skip it.

LUNs for a regular cluster

- DATA Diskgroup (used to store MySQL databases)
  - mysql-prod DATA-1234 – 100 GB Tier 1 Storage
  - mysql-prod DATA-1235 – 100 GB Tier 1 Storage
- NFS Diskgroup (used to store HA NFS shares)
  - mysql-prod NFS-1234 – 100 GB All Tiers
  - mysql-prod NFS-1235 – 100 GB All Tiers

Since this is a regular cluster, we don’t need the separate CRS diskgroup used above. You should also use external redundancy for the diskgroups, because the storage array already provides redundancy. We still want one LUN on each controller so that ASM can stripe across both for better performance. The voting file will simply live on one of the disks in the DATA diskgroup.
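To make the external versus normal redundancy trade-off concrete, here is a rough capacity calculation for the two 100 GB DATA LUNs. It is a simplified model that ignores ASM metadata and rebalance reserves; the `COPIES` table and `usable_gb` function are illustrative, not an Oracle tool.

```python
# Simplified model: number of copies ASM keeps of each extent per redundancy
# level. Ignores metadata overhead and free space reserved for rebalancing.
COPIES = {"EXTERNAL": 1, "NORMAL": 2, "HIGH": 3}


def usable_gb(lun_sizes_gb: list[int], redundancy: str) -> float:
    """Raw capacity divided by the number of copies ASM keeps."""
    return sum(lun_sizes_gb) / COPIES[redundancy]


if __name__ == "__main__":
    data_luns = [100, 100]  # mysql-prod DATA-1234 and DATA-1235
    print(usable_gb(data_luns, "EXTERNAL"))  # 200.0
    print(usable_gb(data_luns, "NORMAL"))    # 100.0
```

With external redundancy both LUNs contribute their full size and ASM still stripes across them, so each controller serves roughly half of the I/O while the array’s own RAID protects the data.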

Again, the NFS diskgroup is optional; skip it if you don’t need HA NFS file shares.

Create the virtual machines

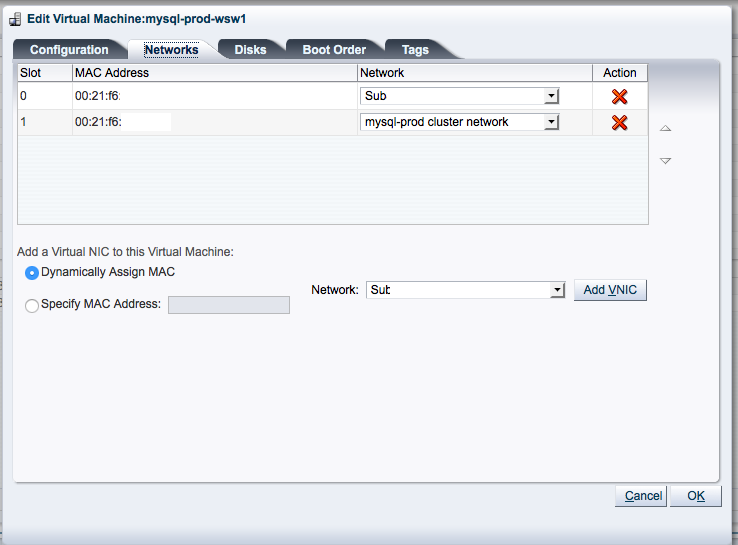

We need to clone the template into a virtual machine running in each datacenter. For this example, let’s call them mysql-prod-dc1-1 and mysql-prod-dc2-1 (where dc1 and dc2 identify the datacenter each one runs in). If you are not building an extended distance cluster, mysql-prod-1 and mysql-prod-2 are probably better names. Once the clones exist, we need to edit the virtual machines: the first network interface must be on the public network we want to use for the cluster, and the second network interface must be on the private network used for cluster heartbeats.
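The naming convention and NIC ordering above can be captured as a small checklist in code. This is a hypothetical sketch: the network names `public` and `private` and both helper functions stand in for whatever your Oracle VM networks are called, and nothing here talks to Oracle VM Manager.

```python
from typing import Optional

# Hypothetical model of the VM settings described in the text.
EXPECTED_NICS = ["public", "private"]  # NIC 0 = public, NIC 1 = heartbeat


def vm_name(cluster: str, node: int, datacenter: Optional[str] = None) -> str:
    """mysql-prod-dc1-1 for an extended cluster, mysql-prod-1 otherwise."""
    parts = [cluster] + ([datacenter] if datacenter else []) + [str(node)]
    return "-".join(parts)


def misconfigured(vms: dict[str, list[str]]) -> list[str]:
    """Names of VMs whose first two NICs are not public, then private."""
    return [name for name, nics in vms.items() if nics[:2] != EXPECTED_NICS]


if __name__ == "__main__":
    vms = {
        vm_name("mysql-prod", 1, "dc1"): ["public", "private"],
        vm_name("mysql-prod", 1, "dc2"): ["private", "public"],  # swapped
    }
    print(misconfigured(vms))  # ['mysql-prod-dc2-1']
```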

We also need to assign the ASM LUNs as physical disks to both virtual machines, in the same order on both. If you are not building an extended distance cluster you will have fewer LUNs to present, but the rule is the same: each shared LUN must occupy exactly the same slot on both machines.
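Before installing the cluster, it is worth double-checking the slot assignments. The sketch below compares two hypothetical slot maps (slot index to disk name, as you might note them down from each VM’s disk list) and reports any shared LUN that is missing or in a different slot. The function and the example maps are illustrative only.

```python
# Hypothetical slot maps (slot index -> disk) noted down from each VM's
# disk assignments. Slot 0 is each VM's own boot disk and is not shared.


def slot_mismatches(vm_a: dict[int, str], vm_b: dict[int, str],
                    shared: list[str]) -> list[str]:
    """Shared LUNs that are missing from either VM or sit in different slots."""
    slot_a = {disk: slot for slot, disk in vm_a.items()}
    slot_b = {disk: slot for slot, disk in vm_b.items()}
    return sorted(
        lun for lun in shared
        if lun not in slot_a or lun not in slot_b or slot_a[lun] != slot_b[lun]
    )


if __name__ == "__main__":
    shared = ["mysql-prod DATA-1234", "mysql-prod DATA-1235"]
    vm1 = {0: "mysql-prod-1 boot", 1: "mysql-prod DATA-1234", 2: "mysql-prod DATA-1235"}
    vm2 = {0: "mysql-prod-2 boot", 1: "mysql-prod DATA-1235", 2: "mysql-prod DATA-1234"}
    print(slot_mismatches(vm1, vm2, shared))  # both LUNs are swapped
```

An empty list means every shared LUN is in the same slot on both machines.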