Deploy the regular cluster

You will be much better off following the deploycluster instructions for deployment than the manual directions farther down. The manual directions will still work, but they take quite a bit more effort.

I have posted an example netconf.ini and params.ini that you could use with a little bit of tweaking. The deploycluster zip file has some sample params.ini and netconfig.ini you can look over as well. You would probably want to reduce the number of disks used in params.ini and change the diskgroup redundancy as well.

One thing to note is that you need to have a DNS entry that resolves to at least one IP for whatever you specify as SCANNAME in netconf.ini. We won’t be using SCAN as we aren’t deploying an Oracle Database, but as far as I know there is no way to remove it/shut it off.
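Before deploying, it is worth confirming that the SCAN name really does resolve. Here is a minimal sketch that uses only the Python standard library. The function name `resolve_ips` is my own invention for illustration, and the check uses the resolver of whatever machine you run it on (including its hosts file), so run it from a machine that uses the same DNS servers as the cluster nodes.

```python
import socket


def resolve_ips(name):
    """Return the sorted, de-duplicated IP addresses that `name` resolves to.

    An empty list means the name did not resolve at all.
    """
    try:
        infos = socket.getaddrinfo(name, None)
    except socket.gaierror:
        return []
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] holds the IP address as a string.
    return sorted({info[4][0] for info in infos})
```

Call it with whatever you set as SCANNAME, for example `resolve_ips("mycluster-scan.example.com")` (a placeholder hostname). Any non-empty result should satisfy the "at least one IP" requirement.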

You will want to skip part 4 of this series because it documents changes only necessary for an extended distance cluster.

Deploy an extended distance cluster

Send VM Messages

Now that we have our virtual machines provisioned, we can power them on. Normally, one could use the deploycluster tool from Oracle to configure the virtual machines, but since there are multiple Oracle VM Managers involved we have to do it manually. This manual deployment was probably the hardest part to figure out because it’s not very well documented. This blog entry from Bjorn Naessens was my best resource. I still recommend downloading the deploycluster tool, as it has better examples and documentation of the answer files I am using here.

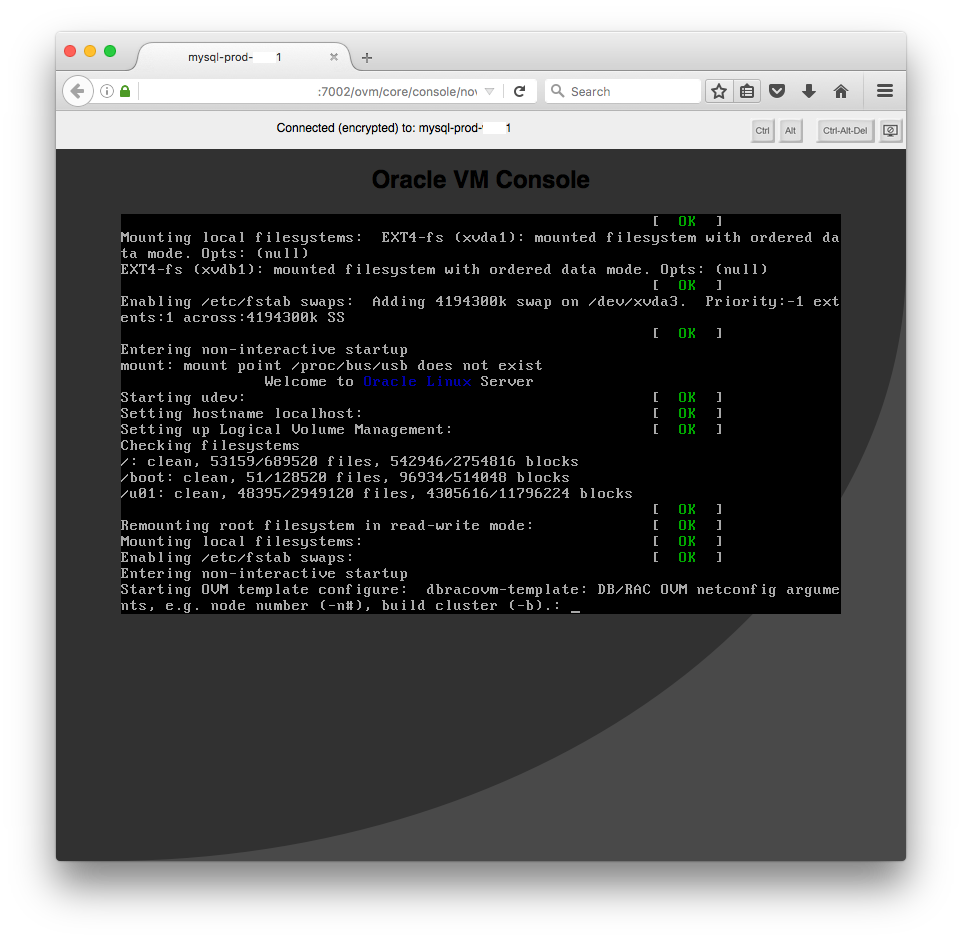

After power up you are greeted with this:

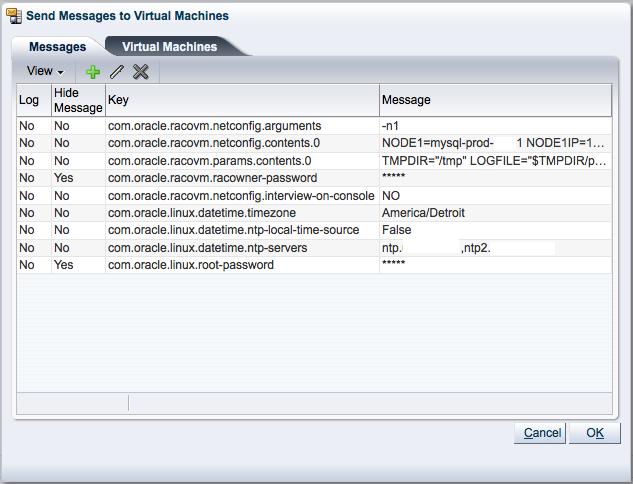

You could manually type a bunch of stuff into the console of each machine, but it is much easier to use Oracle VM Messages. Select the VM in Manager and press the “Send VM Messages” button.

To do a message-based (manual) deployment, you need to send the following messages to the VM:

- com.oracle.racovm.netconfig.arguments == -n# - # is the node number for the VM matching in netconfig.ini
- com.oracle.racovm.netconfig.contents.0 == <The contents of the netconfig.ini file>
- com.oracle.racovm.params.contents.0 == <The contents of the params.ini file>
- com.oracle.racovm.racowner-password == <password for the oracle user>
- com.oracle.racovm.gridowner-password == <password for the grid user (only needed if doing privilege separation)>
- com.oracle.racovm.netconfig.interview-on-console == "NO"
- com.oracle.linux.root-password == <password for the root user> - This should be the last message sent
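Since the order matters (the root password goes last), it can help to assemble the key/value pairs in one place before pasting them into the Send VM Messages dialog. The following is only a sketch: `build_messages` is a hypothetical helper of mine, not part of any Oracle tool, and it does not talk to Oracle VM Manager. It just returns the messages listed above in sending order.

```python
def build_messages(node_number, netconfig_text, params_text,
                   rac_password, root_password, grid_password=None):
    """Return the (key, value) messages in the order they should be sent."""
    messages = [
        ("com.oracle.racovm.netconfig.arguments", f"-n{node_number}"),
        ("com.oracle.racovm.netconfig.contents.0", netconfig_text),
        ("com.oracle.racovm.params.contents.0", params_text),
        ("com.oracle.racovm.racowner-password", rac_password),
    ]
    if grid_password is not None:
        # Only needed when doing privilege separation (separate grid user).
        messages.append(("com.oracle.racovm.gridowner-password", grid_password))
    messages.append(("com.oracle.racovm.netconfig.interview-on-console", "NO"))
    # The root password should be the last message sent.
    messages.append(("com.oracle.linux.root-password", root_password))
    return messages


if __name__ == "__main__":
    # Placeholder values; substitute the real file contents and passwords.
    for key, _value in build_messages(1, "<netconfig.ini contents>",
                                      "<params.ini contents>",
                                      "oracle-pw", "root-pw"):
        print(key)
```

The demo at the bottom prints only the keys so that passwords do not end up in your terminal history or scrollback.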

Optionally you could use these messages as well:

- com.oracle.linux.datetime.timezone == "America/Detroit"
- com.oracle.linux.datetime.ntp-local-time-source == "False"
- com.oracle.linux.datetime.ntp-servers == "ntp.example.com,ntp2.example.com"

Here is an example of the messages I sent to one of the nodes:

Build the cluster

The netconf.ini and params.ini will need to be tweaked for your environment. One thing to note is that you need to have a DNS entry that resolves to at least one IP for whatever you specify as SCANNAME in netconf.ini. We won’t be using SCAN as we aren’t deploying an Oracle Database, but as far as I know there is no way to remove it/shut it off.

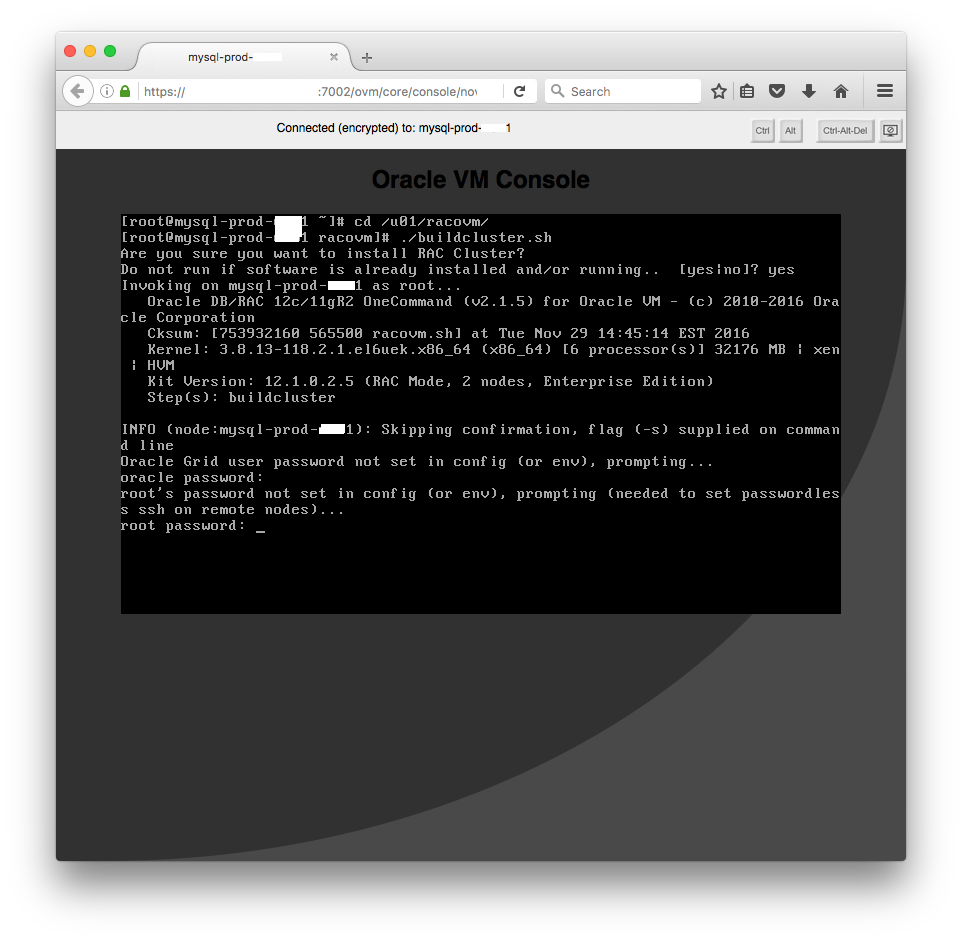

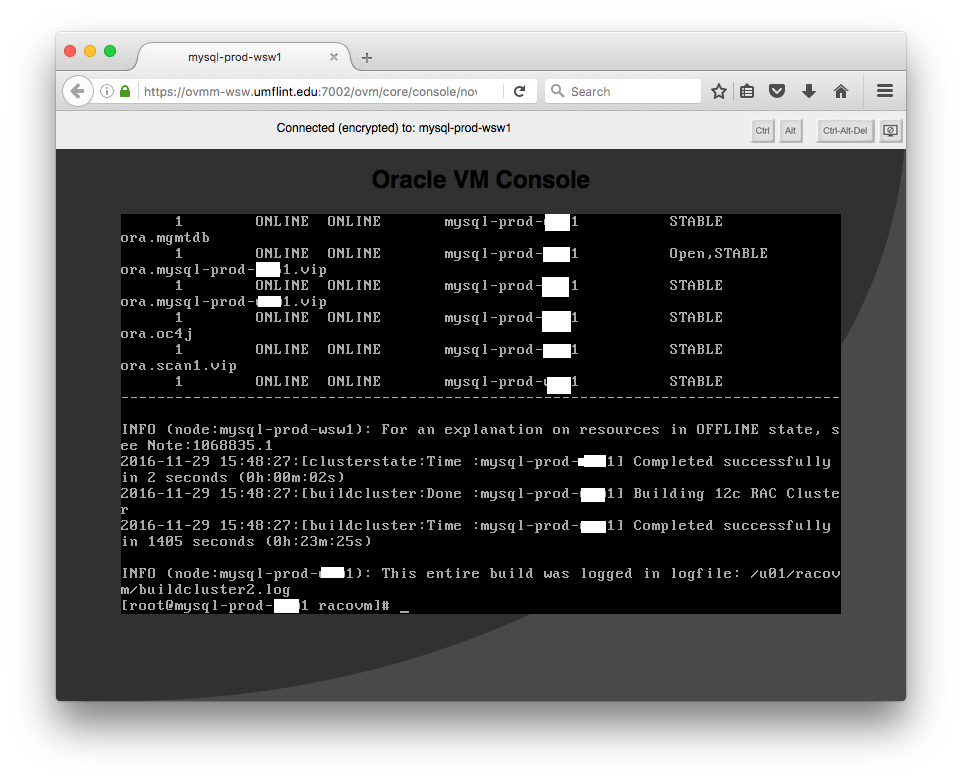

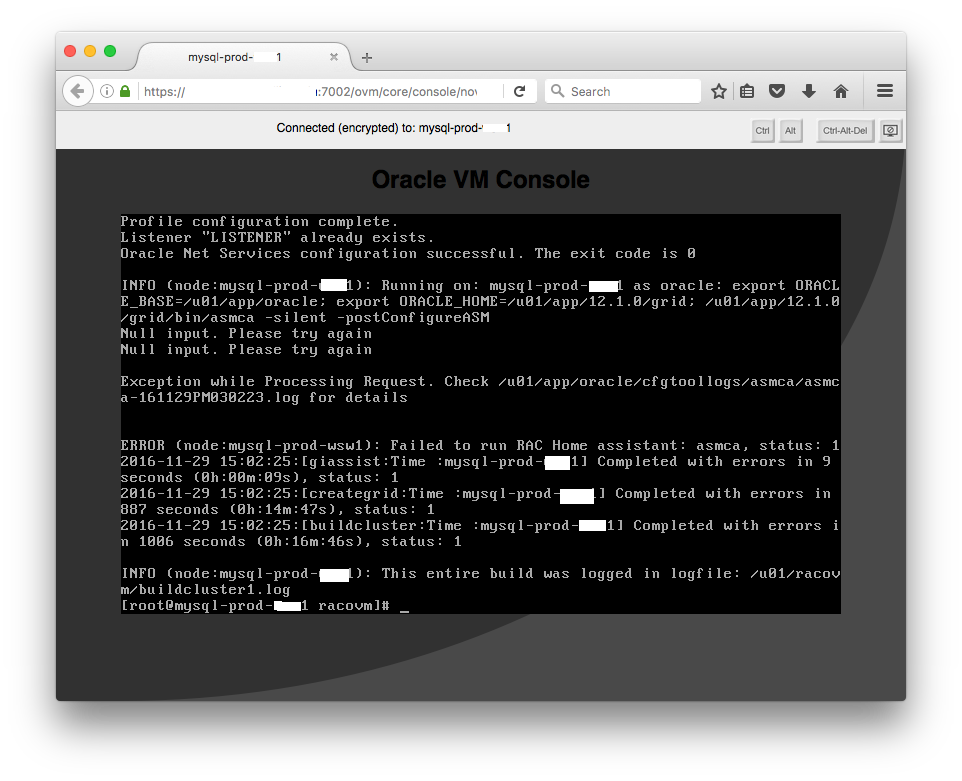

Once both nodes are up and at a login screen you can log into one of them and start provisioning the cluster. Just run buildcluster.sh and provide the requested passwords and off it goes.

If all goes well, you should have a cluster in around 25 minutes.

Troubleshooting

If you see an error about asmca failing, it is probably due to what appears to be a bug with the password prompt. If RACPASSWORD is commented out in params.ini, buildcluster.sh is supposed to prompt you for the password. It does prompt you, but with a Grid Infrastructure only deployment the asmca step still seems to fail. The workaround is to set the value of RACPASSWORD in params.ini on the node you are running buildcluster.sh from. Once the cluster is up, you can delete or comment out that line. You could also put that line in the params.ini that you send as a message to the VMs, but I personally try to avoid plain text passwords.
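If you would rather script the workaround than edit the file by hand, something like the following could do it. This is a sketch under assumptions: it assumes params.ini uses shell-style `KEY="value"` lines with `#` for comments, and the helper names `set_param` and `comment_out` are my own. It works on the file contents as a string, so reading and writing the file on the node is left to you.

```python
import re


def set_param(text, key, value):
    """Set KEY="value", reusing an existing (possibly commented-out) line."""
    pattern = re.compile(rf"^[ \t]*#?[ \t]*{re.escape(key)}=.*$", re.MULTILINE)
    new_line = f'{key}="{value}"'
    if pattern.search(text):
        # A function replacement avoids backslash surprises in the value.
        return pattern.sub(lambda m: new_line, text, count=1)
    # Key not present at all: append it as a new line.
    return text.rstrip("\n") + "\n" + new_line + "\n"


def comment_out(text, key):
    """Comment out every active KEY=... line, leaving other lines untouched."""
    pattern = re.compile(rf"^([ \t]*)({re.escape(key)}=.*)$", re.MULTILINE)
    return pattern.sub(r"\1#\2", text)
```

Use `set_param(contents, "RACPASSWORD", "...")` before running buildcluster.sh, then `comment_out(contents, "RACPASSWORD")` once the cluster is up. A password containing double quotes would need extra escaping that this sketch does not attempt.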